How economists turned to the study of collective decision-making after World War II, faced many impossibilities, and lost interest after solving them

A few months ago, I attended a conference on Quadratic Voting (QV) organized by Glen Weyl and Eric Posner. Its purpose was to study the theoretical and applied aspects of their new voting procedure, in which votes can be bought for a price equal to the square of the number of votes one wants to cast. The money is then split between voters, a scheme which Weyl, Steven Lalley and Posner demonstrate efficiently takes into account the intensity of voters’ preferences and provides a cure to the tyranny of the majority in a variety of public, corporate and court decisions. The conference was unusual, and not just because it brought together economists, political scientists, historians, ethicists and cryptographers to reflect on the ethical, legal and security challenges raised by the implementation of QV. It was unusual because, except for a brief stint (1950-1980), economists have traditionally never cared much about collective decision. Or such is the tentative conclusion Jean-Baptiste Fleury and I reached in the paper we wrote for the conference and just revised.

The 1950s: hopes, impossibilities, and consensus

Historians have construed economists’ rising interest in collective decision after the war as an attempt to rescue the foundations of American capitalist democracy from the Soviet threat. It has also been interpreted as an imperialist foray into the province of political scientists and social psychologists. These perspectives, however, miss the most salient transformation of economics in that period, namely that every American economist became directly enmeshed with policy-making (with the exception of French-bred Debreu, maybe). During the war alone, Milton Friedman was in charge of identifying the right amount of taxes at the Treasury, Richard Musgrave looked for ways to contain inflation at the Federal Reserve Board, Paul Samuelson devised schemes to improve resource allocation at the National Resources Planning Board, and Howard Bowen worked at the Department of Commerce. And this, more than any philosophical agenda or imperialism, was what got economists to think about collective decisions.

Economists doing policy-relevant work in these years were caught in a web of contradictory requirements. On the one hand, policy work required working with policy ends or objective functions. John Hicks and Nicholas Kaldor’s attempts to make welfare judgments on the sole basis of ordinal utilities and Pareto optima (no interpersonal utility comparison allowed) were largely derided as impractical by their American colleagues. On the other hand, working with values seemed inescapable, but it fueled the already strong suspicion that economists, like all social scientists, were incapable of producing non-ideological knowledge. This suspicion was the reason why the physicists who architected the National Science Foundation refused to endow it with a social science division; the reason why several Keynesian economists, including Franco Modigliani and Bowen, were fired from the University of Illinois by McCarthyites; the argument invoked by critics of Samuelson’s “socialist” textbook; and a cause for Lawrence Klein’s move to the UK. Doing applied economics felt like squaring the circle.

A perceived way out of this quandary was to derive the policy objectives from the individual choices of citizens. Economists’ objectivity would thus be preserved. But the idea, clever as it was, appeared difficult to implement. Arrow showed that aggregating individual orderings in a way that would satisfy five “reasonable” axioms, including non-dictatorship, was bound to fail. Samuelson equally played with his colleagues’ nerves. In a 2.5-page paper, he came up with a new definition of public goods that allowed him to find those conditions for optimal provision that public finance theorists had sought for decades, only to dash hopes on the next page: the planner needs to know the preferences of citizens to calculate the sum of marginal benefits, Samuelson explained, but the latter have an incentive to lie. Even assuming you have a theory of how to ground policy in individuals’ preferences, the information necessary to implement it will not be revealed.

Economists were thus left with no other choice but to argue that the policies they advocated relied on a “social consensus.” That is how Musgrave and Friedman found themselves strangely agreeing that tax and monetary policies should contribute to “the maintenance of high employment and price-level stability.” Samuelson likewise claimed that the values imposed on the Bergson social welfare function he publicized were “more or less tacitly acknowledged by extremely divergent schools of thoughts.” Dahl and Lindblom pointed out that, because of a kind of “social indoctrination,” American citizens shared a common set of values. And, for altogether different reasons, James Buchanan also advocated consensus as the legitimate way to choose policies.

The 1960s: conflict, more pitfalls, and hopeless practitioners

Advocating a “consensus” became more difficult as the country was increasingly torn by disagreement over the Vietnam intervention, student protests, urban riots and the Civil Rights Movement. And it was a big problem, because Johnson’s response to the social upheaval was to ask for more policy expertise. His War on Poverty created a fancy new and well-funded discipline, policy analysis. But little guidance was offered to the applied economists in charge of implementing new policies and evaluating them. Instead, normative impossibilities were supplemented with a flow of new studies emphasizing the pitfalls of real decision-making processes. Public (and business) decisions were riddled with bureaucratic biases, free-riding, rent-seeking, logrolling, voting paradoxes and cycles. Many researchers attended conferences on “non-market decision making,” later renamed Public Choice. Their architects, among them Buchanan and Gordon Tullock, emphasized that the costs of coordinating through markets should be balanced against the costs of public intervention, and the pitfalls of public decision-making were among them.

Practitioners’ disarray was almost tangible. The proceedings of the 1966 conference on the “analysis of the public sector” (one of the first books titled Public Economics) included endless discussions of the choice of an objective function to guide policy makers. Julius Margolis summarized the debates as a rift between those who considered the government as an independent social body endowed with its own views, and those who believed its decisions represented a social ordering derived from the aggregation of individual preferences. These divisions found an echo in applied debates, in particular on weighting in cost-benefit analysis (CBA). Harvard’s Otto Eckstein, for instance, believed that “in no event should the technician arrogate the weighting of objectives to himself,” and that the parliament should be provided with a matrix listing costs and benefits of various environmental projects with regard to alternative objectives (flood protection, transportation, health, leisure). His proposal was defeated. Welfare economist Jerome Rothenberg likewise attempted to revamp the CBA techniques he used to evaluate urban renewal programs. The weights he ascribed to groups of citizens reflected the score of their representatives in the last congressional election, which he believed tied his analysis to “central decision-making processes in a representative democracy.”

The 1970s/early 1980s: collective decision unchained

The 1970s brought better news. Theoretical impossibilities were solved. Amartya Sen proposed to enlarge the informational basis underwriting social choices to interpersonal comparison of cardinal utilities, preference intensity, and other non-welfarist characteristics of individuals. At Northwestern, the likes of John Ledyard, Ted Groves, Martin Loeb, Mark Satterthwaite, Roger Myerson and Paul Milgrom (and Clark at Chicago and Maskin at MIT) built on previous work by William Vickrey, Leonid Hurwicz and Jacob Marschak to overcome Samuelson’s revelation problem. They developed mechanisms that induced agents to reveal their true information by forcing them to bear the marginal costs of their actions, and applied them to taxes and car crash deterrence. These ideas became more powerful after Vernon Smith and Charles Plott, among others, showed that experiments enabled better institutional design and test-bedding.

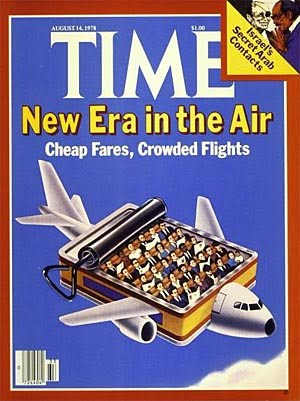

The combination of new mechanism design expertise and experimental economics seemed promising, and changes in policy regimes immediately gave economists ample opportunity to sell it to public, then private, clients. Johnson’s interventionist activism had given way to an approach to policies which favored market-like incentives over “command-and-control” techniques, and to Reagan’s “regulatory relief.” The use of CBA, now compulsory, was redirected toward the identification of costly policies. Experimentalists and market designers were asked to implement more efficient ways of making collective decisions to allocate airport slots, public television programs, natural gas, and resources in space stations. The 1994 FCC auctions became the poster child for the success of this new approach.

The strange marginalization of collective decision after 1980

By the early 1980s, economists knew how to analyze and transform collective decision processes. And clients, public and private, wanted this expertise. It is therefore surprising that it was in that decade that economists’ interest in collective decision began to wane. The topic did not disappear altogether, but it largely became a background concern, or something pursued in boundary communities and published in field journals only. How this happened is not fully clear to us, but the explanations we toy with include:

1) New client-expert relations (and the Bayesian game-theoretic framework) made economists more like engineers, whose role was to provide the means to fulfill given aims rather than to think more broadly about how these ends were constructed.

2) Public and private demands were for policies or mechanisms emulating markets, that is, mechanisms to coordinate individual decisions rather than aggregate them.

3) Anthony Atkinson and Joseph Stiglitz’s Lectures on Public Economics exemplified (and influenced?) the rise of general equilibrium models where government objectives were represented by an exogenously determined social welfare function. This practice pushed collective decision concerns outside economic models, and those economists studying the origins and values behind these functions (social choice theorists) were increasingly marginalized. The scope of public choice broadened way beyond Buchanan’s constitutional program. Collective decision wasn’t the unifying concern of the field anymore.

4) Research in collective decision never stabilized within economics. As it moved to the background of economists’ minds, this expertise was (re)claimed by political scientists, law specialists, philosophers and public policy scholars. Their departments are where most “collective decision” courses are now offered.

I do not mean that collective decision is a topic now absent from economists’ minds. It is still on the minds of many social choice and public choice economists. New voting procedures such as storable votes are being investigated, and public economists have recently engaged in improving how social welfare functions reflect society’s concern with fairness. Still, the determination of collective objectives is absent from most standard models in micro and macro. What the consequences for economic expertise are in a period of distrust toward elections is not a question for me to answer. But investigating how the practice of using an exogenous social welfare function disseminated during the 1980s is definitely the next step to a better understanding of the present situation.

Full paper here.